Procedural Image Programs for Representation Learning

Abstract

Learning image representations using synthetic data allows training neural networks without some of the concerns associated with real images, such as privacy and bias. Existing work focuses on a handful of generative processes which are hard to integrate together to scale up. To overcome this, we propose training with a large dataset of twenty-one thousand programs, each one generating a diverse set of synthetic images. These programs are short code snippets, which are easy to modify and fast to execute using OpenGL. The proposed dataset can be used for both supervised and unsupervised representation learning, and reduces the gap between pre-training with real and procedurally generated images by 38%.

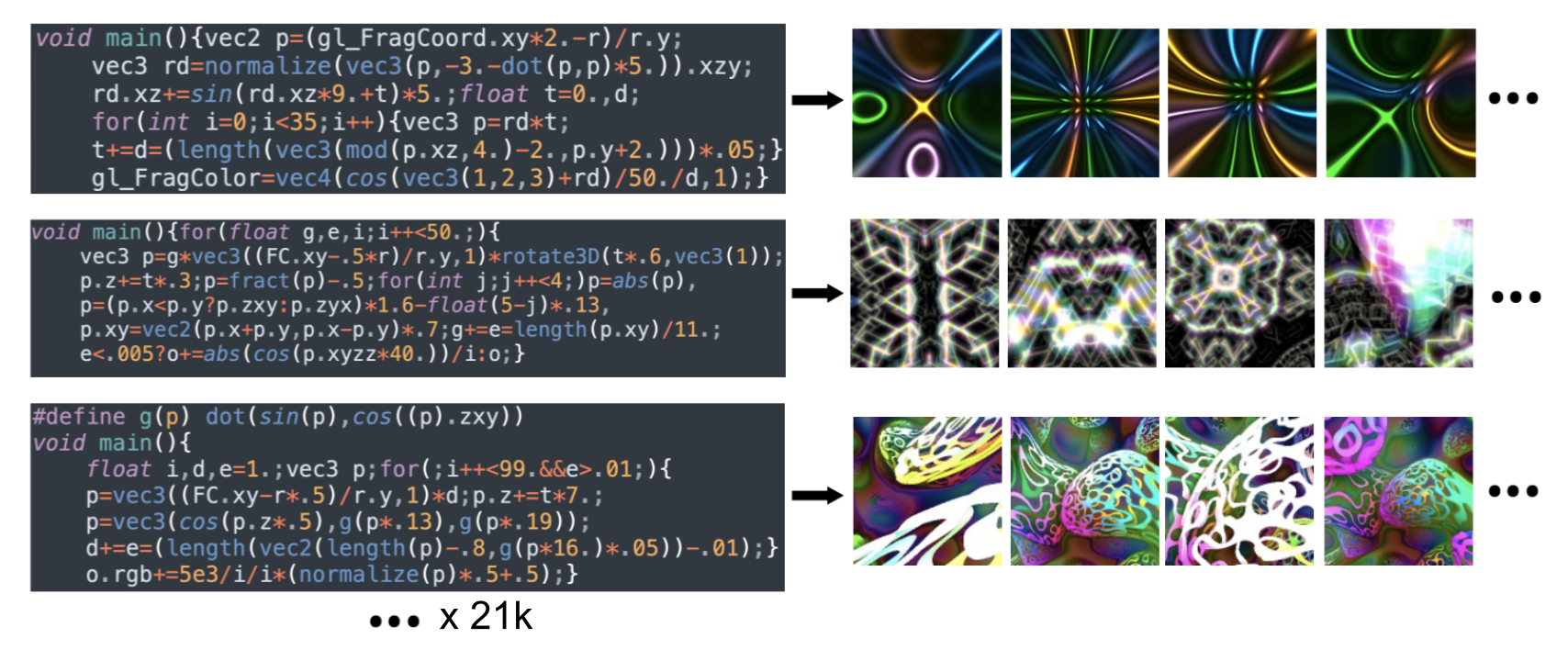

Image programs dataset

We collect 21 thousand OpenGL fragment shaders: small code snippets, each of which generates a diverse set of images. Of these programs, 1k come from TwiGL and 20k from Shadertoy.

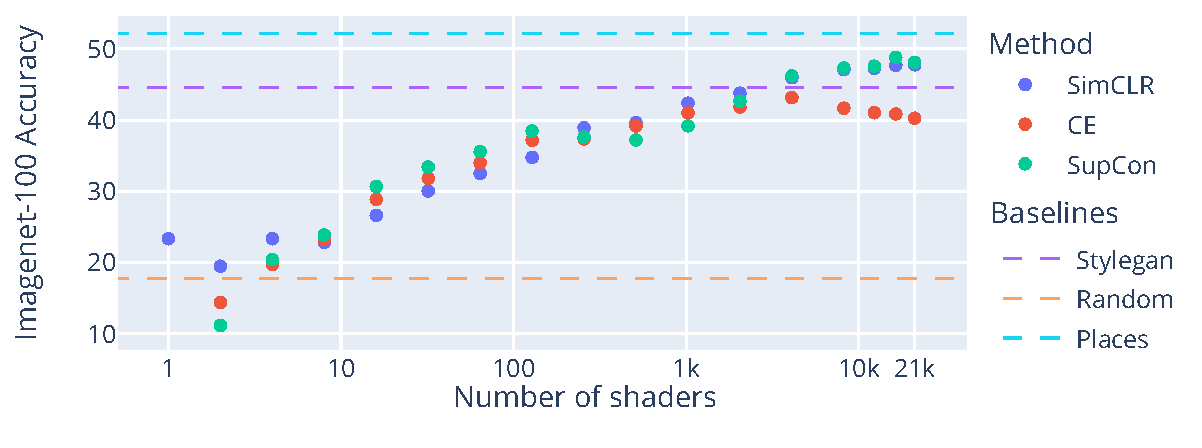
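To make the idea of an image program concrete, here is a minimal sketch that emulates a fragment shader on the CPU with NumPy. It is not one of the collected shaders: `toy_shader` and `render` are hypothetical names, and the time input `t` is an assumption modeled on the `iTime` uniform that Shadertoy programs receive. The point is only that a few lines of per-pixel code define a whole family of images.

```python
import numpy as np

def render(shader, width=64, height=64, t=0.0):
    """Evaluate `shader` at every pixel, as a fragment shader would on the GPU."""
    ys, xs = np.mgrid[0:height, 0:width]
    # Normalized pixel-center coordinates in (0, 1), analogous to fragCoord / iResolution.
    u = (xs + 0.5) / width
    v = (ys + 0.5) / height
    rgb = shader(u, v, t)  # float array of shape (height, width, 3)
    return (np.clip(rgb, 0.0, 1.0) * 255).astype(np.uint8)

def toy_shader(u, v, t):
    """Hypothetical example program: three phase-shifted sine patterns."""
    r = 0.5 + 0.5 * np.sin(10.0 * u + t)
    g = 0.5 + 0.5 * np.sin(10.0 * v + 2.0 * t)
    b = 0.5 + 0.5 * np.sin(10.0 * (u + v) + 3.0 * t)
    return np.stack([r, g, b], axis=-1)

# Sampling the same program at different times gives different images.
images = [render(toy_shader, t=t) for t in (0.0, 1.0, 2.0)]
```

Each entry of `images` is a `uint8` array of shape `(64, 64, 3)`. The real programs run on the GPU through OpenGL, which is what makes generation fast.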

Scaling behavior

ImageNet-100 performance for contrastive learning (SimCLR), supervised classification (CE), and supervised contrastive learning (SupCon), using an AlexNet network trained on 100k images generated from an increasing number of shader programs, selected at random from the Shaders 21k dataset.

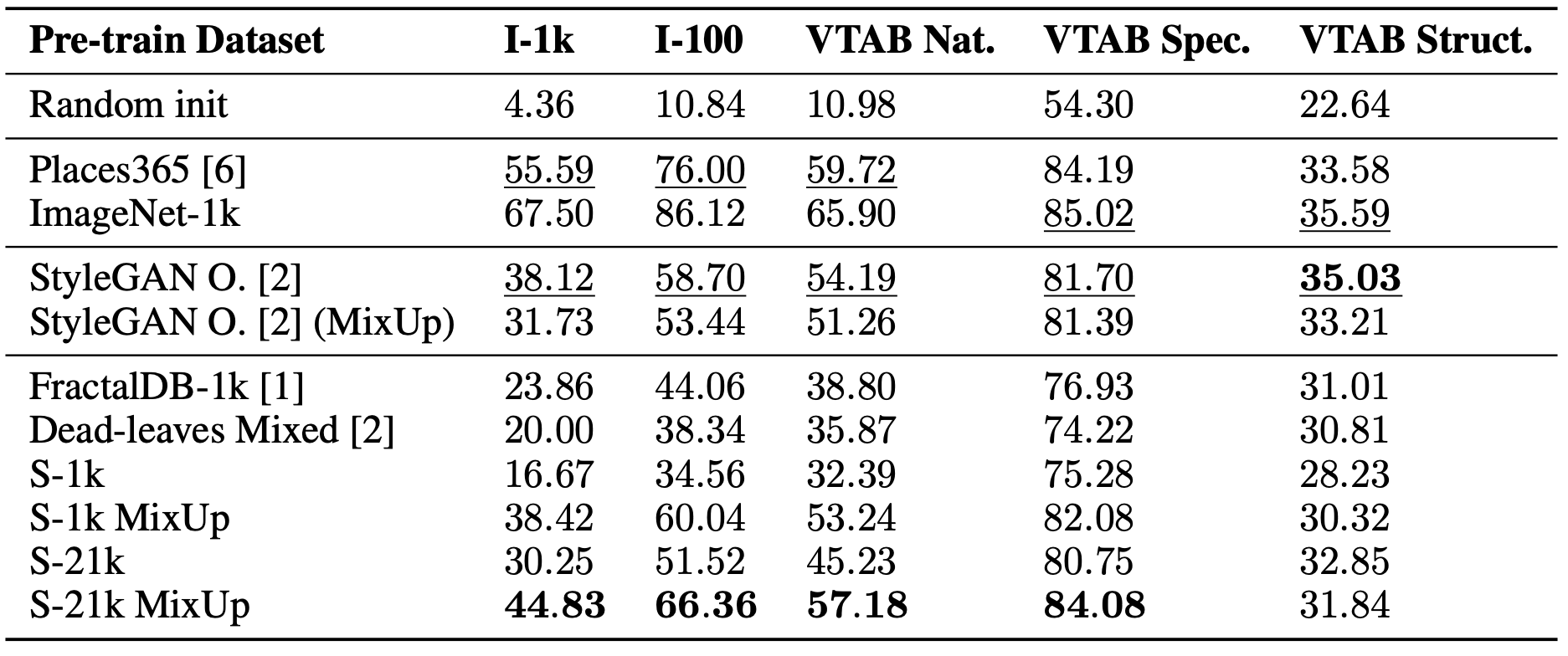
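The setup in this experiment, a fixed budget of 100k images spread over a growing number of randomly chosen programs, can be sketched as follows. The even split across programs and the function name `allocate_budget` are assumptions for illustration; the text above only states the total image count and that programs are selected at random.

```python
import random

def allocate_budget(num_available, num_selected, total_images, seed=0):
    """Pick `num_selected` program ids at random and split the image budget evenly."""
    rng = random.Random(seed)
    chosen = rng.sample(range(num_available), num_selected)
    base, extra = divmod(total_images, num_selected)
    # The first `extra` programs render one more image so the counts sum exactly.
    return {pid: base + (1 if i < extra else 0) for i, pid in enumerate(chosen)}

# Example: 300 programs out of 21k sharing a 100k-image budget.
plan = allocate_budget(num_available=21_000, num_selected=300, total_images=100_000)
```

`plan` maps each selected program id to the number of images to render from it. In the supervised settings, one natural choice (again an assumption here) is to use the program id as the class label.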

Performance

Linear transfer performance on ImageNet-1k/100 and VTAB for a ResNet50 pre-trained with MoCo-v2 on different image models.
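Linear transfer means the pre-trained encoder is frozen and only a linear classifier is trained on its features. The sketch below shows that protocol in NumPy under loud assumptions: the features are random Gaussian clusters standing in for encoder outputs, and the optimizer, learning rate, and step count are illustrative choices, not the settings used for the reported numbers.

```python
import numpy as np

def train_linear_probe(features, labels, num_classes, lr=0.05, steps=500):
    """Fit a softmax classifier on frozen features with plain gradient descent."""
    n, d = features.shape
    W = np.zeros((d, num_classes))
    b = np.zeros(num_classes)
    onehot = np.eye(num_classes)[labels]
    for _ in range(steps):
        logits = features @ W + b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - onehot) / n  # gradient of mean cross-entropy w.r.t. logits
        W -= lr * features.T @ grad
        b -= lr * grad.sum(axis=0)
    return W, b

def accuracy(features, labels, W, b):
    """Fraction of samples whose highest-scoring class matches the label."""
    return float(((features @ W + b).argmax(axis=1) == labels).mean())

# Stand-in for frozen encoder outputs: two well-separated Gaussian clusters.
rng = np.random.default_rng(0)
labels = rng.integers(0, 2, size=200)
features = rng.normal(size=(200, 8)) + 3.0 * labels[:, None]
W, b = train_linear_probe(features, labels, num_classes=2)
```

Because the encoder never changes, the probe's accuracy measures how linearly separable the pre-trained features are, which is why it is a common yardstick for comparing pre-training datasets.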

Datasets

Randomly selected samples for each type of novel training data proposed in the paper.

Feature visualizations

@inproceedings{baradad2022procedural,
  title={Procedural Image Programs for Representation Learning},
  author={Manel Baradad and Chun-Fu Chen and Jonas Wulff and Tongzhou Wang and Rogerio Feris and Antonio Torralba and Phillip Isola},
  booktitle={Advances in Neural Information Processing Systems},
  editor={Alice H. Oh and Alekh Agarwal and Danielle Belgrave and Kyunghyun Cho},
  year={2022},
  url={https://openreview.net/forum?id=wJwHTgIoE0P}
}

Acknowledgements

Manel Baradad was supported by the LaCaixa Fellowship, and this research was supported by a grant from the MIT-IBM Watson AI Lab. Rogerio Feris was supported in part by DARPA LwLL. Experiments were partially conducted using computation resources from the Satori cluster, donated by IBM to MIT, and MIT's SuperCloud cluster.